Helm, its one amazing piece of software that I use multiple times per day!

What is Helm?

You can think of helm as a package manager for kubernetes, but in fact its much more than that.

Think about it in the following way:

- Kubernetes Package Manager

- Way to templatize your applications (this is the part im super excited about)

- Easy way to install applications to your kubernetes cluster

- Easy way to do upgrades to your applications

- Websites such as artifacthub.io provides a nice interface to lookup any application an how to install or upgrade that application.

How does Helm work?

Helm uses your kubernetes config to connect to your kubernetes cluster. In most cases it utilises the config defined by the KUBECONFIG environment variable, which in most cases points to ~/kube/config.

If you want to follow along, you can view the following blog post to provision a kubernetes cluster locally:

Once you have provisioned your kubernetes cluster locally, you can proceed to install helm, I will make the assumption that you are using Mac:

1

| |

Once helm has been installed, you can test the installation by listing any helm releases, by running:

1

| |

Helm Charts

Helm uses a packaging format called charts, which is a collection of files that describes a related set of kubernetes resources. A sinlge helm chart m ight be used to deploy something simple such as a deployment or something complex that deploys a deployment, ingress, horizontal pod autoscaler, etc.

Using Helm to deploy applications

So let’s assume that we have our kubernetes cluster deployed, and now we are ready to deploy some applications to kubernetes, but we are unsure on how we would do that.

Let’s assume we want to install Nginx.

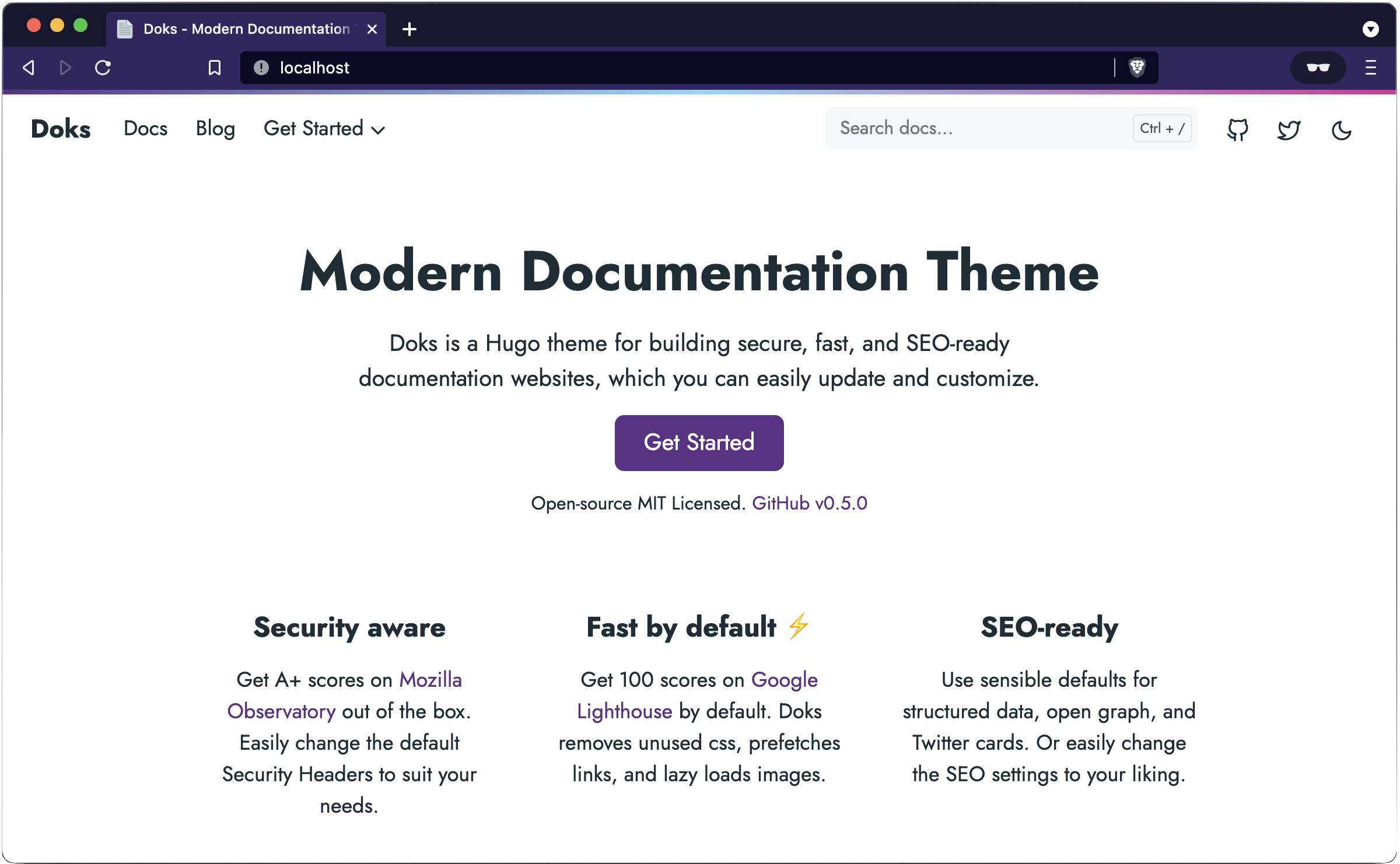

First we would navigate to artifacthub.io, which is a repository that holds a bunch of helm charts and the information on how to deploy helm charts to our cluster.

Then we would search for Nginx, which would ultimately let us land on:

On this view, we have super useful information such as how to use this helm chart, the default values, etc.

Now that we have identified the chart that we want to install, we can have a look at their readme, which will indicate how to install the chart:

1 2 | |

But before we do that, if we think about it, we add a repository, then before we install a release, we could first find information such as the release versions, etc.

So the way I would do it, is to first add the repository:

1

| |

Then since we have added the repository, we can update our repository to ensure that we have the latest release versions:

1

| |

Now that we have updated our local repositories, we want to find the release versions, and we can do that by listing the repository in question. For example, if we don’t know the application name, we can search by the repository name:

1

| |

In this case we will get an output of all the applications that is currently being hosted by Bitnami.

If we know the repository and the release name, we can extend our search by using:

1

| |

In this case we get an output of all the Nginx release versions that is currently hosted by Bitnami.

Installing a Helm Release

Now that we have received a response from helm search repo, we can see that we have different release versions, as example:

1 2 3 | |

For each helm chart, the chart has default values which means, when we install the helm release it will use the default values which is defined by the helm chart.

We have the concept of overriding the default values with a yaml configuration file we usually refer to values.yaml, that we can define the values that we want to override our default values with.

To get the current default values, we can use helm show values, which will look like the following:

1

| |

That will output to standard out, but we can redirect the output to a file using the following:

1

| |

Now that we have redirected the output to nginx-values.yaml, we can inspect the default values using cat nginx-values.yaml, and any values that we see that we want to override, we can edit the yaml file and once we are done we can save it.

Now that we have our override values, we can install a release to our kubernetes cluster.

Let’s assume we want to install nginx to our cluster under the name my-nginx and we want to deploy it to the namespace called web-servers:

1

| |

In the example above, we defined the following:

upgrade --install- meaning we are installing a release, if already exists, do an upgrademy-nginx- use the release namemy-nginxbitnami/nginx- use the repository and chart named nginx--values nginx-values.yaml- define the values file with the overrides--namespace web-servers --create-namespace- define the namespace where the release will be installed to, and create the namespace if not exists--version 13.2.22- specify the version of the chart to be installed

Information about the release

We can view information about our release by running:

1

| |

Creating your own helm charts

It’s very common to create your own helm charts when you follow a common pattern in a microservice architecture or something else, where you only want to override specific values such as the container image, etc.

In this case we can create our own helm chart using:

1 2 3 | |

This will create a scaffoliding project with the required information that we need to create our own helm chart. If we look at a tree view, it will look like the following:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 | |

This example chart can already be used, to see what this chart will produce when running it with helm, we can use the helm template command:

1 2 | |

The output will be something like the following:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 | |

In our example it will create a service account, service, deployment, etc.

As you can see the spec.template.spec.containers[].image is set to nginx:1.16.0, and to see how that was computed, we can have a look at templates/deployment.yaml:

As you can see in image: section we have .Values.image.repository and .Values.image.tag, and those values are being retrieved from the values.yaml file, and when we look at the values.yaml file:

1 2 3 4 5 | |

If we want to override the image repository and image tag, we can update the values.yaml file to lets say:

1 2 3 4 | |

When we run our helm template command again, we can see that the computed values changed to what we want:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 | |

Another way is to use --set:

1 2 3 4 5 6 7 8 | |

The template subcommand provides a great way to debug your charts. To learn more about helm charts, view their documentation.

Publish your Helm Chart to ChartMuseum

ChartMuseum is an open-source Helm Chart Repository server written in Go.

Running chartmuseum demonstration will be done locally on my workstation using Docker. To run the server:

1 2 3 4 5 6 7 | |

Now that ChartMuseum is running, we will need to install a helm plugin called helm-push which helps to push charts to our chartmusuem repository:

1

| |

We can verify if our plugin was installed:

1 2 3 | |

Now we add our chartmuseum helm chart repository, which we will call cm-local:

1

| |

We can list our helm repository:

1 2 3 | |

Now that our helm repository has been added, we can push our helm chart to our helm chart repository. Ensure that we are in our chart repository directory, where the Chart.yaml file should be in our current directory. We need this file as it holds metadata about our chart.

We can view the Chart.yaml:

1 2 3 4 5 6 | |

Push the helm chart to chartmuseum:

1 2 3 | |

Now we should update our repositories so that we can get the latest changes:

1

| |

Now we can list the charts under our repository:

1 2 3 | |

We can now get the values for our helm chart by running:

1

| |

This returns the values yaml that we can use for our chart, so let’s say you want to output the values yaml so that we can use to to deploy a release we can do:

1

| |

Now when we want to deploy a release, we can do:

1

| |

After the release was deployed, we can list the releases by running:

1

| |

And to view the release history:

1

| |

Resources

Please find the following information with regards to Helm documentation: - helm docs - helm cart template guide

If you need a kubernetes cluster and you would like to run this locally, find the following documentation in order to do that: - using kind for local kubernetes clusters

Thank You

Thanks for reading, feel free to check out my website, feel free to subscribe to my newsletter or follow me at @ruanbekker on Twitter.

- Linktree: https://go.ruan.dev/links

- Patreon: https://go.ruan.dev/patreon